Hifax: Hierarchically Flexible Access Control Scheme

1. Introduction

Any non-trivial computer system needs to control access to the functionality and services it provides, and has to be able to regulate what its users can or cannot do with and within the system. Controlling users' access to a system, through careful management of the access rights given to them, is generally known as access control. Any design or methodology describing how this can actually be achieved can therefore be called an access control scheme.

A good access control scheme should be very flexible, both in terms of the features (i.e. capabilities) it supports and the ease with which it can be deployed and used. Features flexibility refers to the scheme allowing its administrators to manage the permissions (i.e. what can or cannot be done) of a specific user or group of users at a sufficiently detailed level. Though features flexibility is obviously a desirable attribute in an access control system, greater flexibility may bring greater complexity and operational risk if the users of the system cannot easily opt out of the additional flexibility (should they choose to do so) and are forced to adopt a "straitjacket" solution.

We refer to the ability to "pick and choose" the set of features of an access control scheme, such that the complexity and cost of an implementation can be tuned by the set of advanced features actually being used, as deployment flexibility. A good access control scheme, therefore, is one which possesses both features flexibility and deployment flexibility. It should allow detailed control over various aspects of the system if needed (e.g. user and group management, permissioning, etc.), but it should also be usable in a relatively simple fashion (for example, as an out-of-the-box deployment) by applications that don't require fine-grained access control.

In this paper, we describe the design of an access control scheme called Hifax (Hierarchically Flexible Access Control Scheme). Hifax aims to be very flexible both in terms of the features it supports and the ease with which those features can be deployed and used. Its design gives system administrators fine control over user access permissions while at the same time allowing them to deploy it with relatively little effort in simple deployment scenarios. By employing techniques such as hierarchical grouping and inheritance, Hifax allows fine control over the entitlement management process (managing groups, roles, permissions, resources, etc.) and ensures that deployment complexity increases gradually, only as more and more features are used.

2. Concepts

In this section, we describe the fundamental concepts and abstractions used in the design of Hifax. These concepts manifest themselves as the domain objects of Hifax and follow directly from observations of the problem domain (i.e. access control management).

2.1. User

A user is an actor that is going to "use" the system. A user could either be a human operator or a non-human entity (e.g. a bot, a process, etc.). Information about users is usually kept in an enterprise-wide directory system like Active Directory.

2.2. Group

Users can be members of various groups. This helps with managing users who should be treated in the same way for a specific purpose like access control, system login expiry management, etc.

Groups are hierarchically structured as follows:

- Subgroups: A group is composed of zero or more users and zero or more other groups called subgroups. In other words, groups are hierarchical in nature. There is a parent-child relationship between a group and its subgroups, where a subgroup is considered a parent of the group that includes it. Conversely, the group that includes subgroups is considered a child of them.

- Sharing: A group can be a subgroup of many different groups. In other words, subgroups are shared, not owned, by the child group.

- Recursive Inheritance: A group which includes another group indirectly (i.e. through its subgroups, their subgroups and so on) is said to be that group's grandchild. The included group is said to be the grandparent of the including group.

- Non-cyclicalness: A group cannot be its own grandparent. In other words, a group cannot be included, directly or indirectly, in any of its own subgroups. This prevents cycles in group definitions.

- Banning: Just as users are added to a group, they can also be banned from it. Banning removes a user from a group they would otherwise be included in, whether directly or indirectly through group inheritance. Banning allows fine tuning of group user membership.

- Group User Graph: There is a special group called RootGroup which is included, directly or indirectly, by every other group. Users and groups form a directed acyclic graph (DAG) originating from RootGroup, called the Group User Graph (GUG).

- GUG Nodes: Each node of the GUG is either a group or a user. User nodes are the leaf nodes of the graph; any traversal from a root node ultimately ends at them.

- GEUS: The final set of users in a given group, considering all the users in its subgroups recursively, is called the group effective user set (GEUS).
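The structural rules above can be sketched in Python. The snippet is a minimal, hypothetical model: the Group class, its members and parents fields and the add_subgroup method are illustrative names, not a Hifax API. Following the terminology above, a group's subgroups are stored as its parents, and add_subgroup enforces non-cyclicalness by refusing any edge that would make a group its own grandparent.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass(eq=False)  # eq=False keeps identity hashing, so groups can live in sets
class Group:
    """Hypothetical GUG group node (not an actual Hifax API)."""
    name: str
    # Direct user entries: username -> True (added) or False (banned).
    members: dict[str, bool] = field(default_factory=dict)
    # Subgroups; in Hifax terminology these are the group's parents.
    parents: list[Group] = field(default_factory=list)

    def ancestors(self) -> set[Group]:
        """All parents and grandparents of this group, collected iteratively."""
        found: set[Group] = set()
        stack = list(self.parents)
        while stack:
            g = stack.pop()
            if g not in found:
                found.add(g)
                stack.extend(g.parents)
        return found

    def add_subgroup(self, sub: Group) -> None:
        """Include `sub` as a subgroup, rejecting edges that would create a cycle."""
        # The new edge makes `sub` a parent of `self`. That closes a cycle if
        # `self` is `sub` itself or already one of `sub`'s grandparents.
        if sub is self or self in sub.ancestors():
            raise ValueError(f"{sub.name} cannot be a subgroup of {self.name} (cycle)")
        if sub not in self.parents:
            self.parents.append(sub)


# Hypothetical groups: Admins -> PowerUsers -> AllUsers (parent -> child).
admins = Group("Admins")
power_users = Group("PowerUsers")
all_users = Group("AllUsers")
power_users.add_subgroup(admins)
all_users.add_subgroup(power_users)
try:
    admins.add_subgroup(all_users)  # would make Admins its own grandparent
except ValueError as err:
    print(err)
```

The group names are made up. The last call fails because Admins is already a grandparent of AllUsers, so including AllUsers in Admins would close a cycle.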

2.3. Resource

A resource is any part of a system over which we want to impose some level of access control. A resource could be anything of significance in a system. Some examples of typical resources in a given computer system are:

- Functions or segments of the code to execute in the system
- GUI elements like menus to access, forms to fill in, buttons to click, etc.
- System-wide operations like login, print, networking, etc.
- Persistent entities like deal information, market data, user information, etc.
- Commands or functions in the system (e.g. API elements)

The level of granularity used to designate resources depends entirely on the level of access control required. Finer granularity enables finer access control but comes at the expense of increased complexity and a higher cost of access control implementation.

The list of resources defined in a system forms an inventory as far as access control is concerned. Once the resources are defined, the rest of the system operates to control how, and by whom, those resources can be accessed.

Resources are named with a hierarchical naming convention to help with managing access to resource hierarchies using pattern matching techniques. This way, for example, the resource identifier API.Sales.* could refer to the entire set of API functions provided for the sales module of a business application.
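To show how hierarchical names combine with pattern matching, here is a small Python sketch using the standard re module. Apart from API.Accounting.EndPeriod, the resource names are hypothetical, and the sketch assumes patterns are regular expressions (as with a permission's resource regex). Written as a regex, API.Sales.* needs its dots escaped, since an unescaped . matches any character.

```python
from __future__ import annotations

import re

# Hypothetical resource inventory using hierarchical, dot-separated names.
RESOURCES = [
    "API.Sales.CreateOrder",
    "API.Sales.ListCustomers",
    "API.Accounting.EndPeriod",
    "UI.Sales.CustomerForm",
]


def matching_resources(pattern: str, resources: list[str]) -> list[str]:
    """Return the resources whose whole name matches the regular expression."""
    rx = re.compile(pattern)
    return [name for name in resources if rx.fullmatch(name)]


# Regex form of API.Sales.*: literal dots escaped, ".*" for everything below.
sales_api = matching_resources(r"API\.Sales\..*", RESOURCES)
print(sales_api)  # ['API.Sales.CreateOrder', 'API.Sales.ListCustomers']
```

Using fullmatch rather than match prevents a pattern such as API\.Sales from accidentally covering longer names that merely start with it.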

2.3.1. Resource Parameters

Each resource is associated with a set of name-value pairs called resource parameters. Some resource parameters are static (i.e. known at development time); such parameters are specified by hard-coding them in the source code. An example of a static parameter is the resource name: every resource has a name, and that name is known at development time.

Other parameters of a resource may only be known at runtime. Such parameters are called dynamic parameters.

As an example, consider a resource which corresponds to a data record representing customer information in the sales management module of a business application. The values of fields like enrollment_date, company_name, geographical_region, etc. will only be known once the record is loaded at runtime. Notice that although we know at development time that the resource has fields such as company_name, we cannot know the values any particular customer record will hold for those fields. Hence, if we want an access control scheme that imposes restrictions based on the values of those fields, we have to rely on the dynamic parameters feature of resources.

2.4. Operation

Operations refer to the way a given resource is used in the system. There are five operation types:

- Create: Operations involving adding a new item in the system (e.g. a new record, new definition, etc.).
- Read: Operations involving accessing, reading and listing data entities.
- Update: Operations involving changing data and entities in the system.
- Delete: Operations involving deleting data, removing objects, etc.
- Execute: Any operations involving some sort of execution (running functions, accessing APIs, etc.).

These operations are known as CRUDE. You will notice that the first four of them refer to persistence operations over database records or files, whereas the last one refers to executing code/functions in a system and accessing (viewing) user interfaces.

2.5. Permission

A permission defines the set of operations that can be carried out on either a specific resource or a family of resources as described by a resource regex. Permissions act as the link between resources and the operations to be carried out on them.

Note that the access enforcing code (e.g. an API resource) does not know anything about permissions. Permissions are access control management abstractions. Enforcing code only knows about the resources it is guarding and the operations about to be attempted on them in the code that immediately follows, both of which are closely tied to, and confined to, the part of the system that needs to be access controlled. A corollary is that permissions can be managed (i.e. new permissions added, existing ones modified to change their resource and operation scopes, etc.) independently of the actual system that is access controlled. This provides a good degree of operational flexibility as far as access control is concerned.

A permission could either be given to (i.e. granted) or taken from (i.e. revoked) a user or a group.

Permissions are defined by specifying the following:

- Name: Each permission is uniquely identified by a name string. Just like resources, permissions should be named using a hierarchical structure so that pattern matching techniques can be used to refer to a collection of them in one go.

- Operations: A combination of the C/R/U/D/E operations that are to be carried out on a resource. Each operation type can be represented by a different bit in a byte, so multiple operation types can be combined into a single byte. For example, suppose we represent operation types as follows:

  - int OP_CREATE = 0x01
  - int OP_READ = 0x02
  - int OP_UPDATE = 0x04
  - int OP_DELETE = 0x08
  - int OP_EXECUTE = 0x10

  Some of the composite operations that can be specified using the above encoding are:

  - 3 ⇒ CR operations (OP_CREATE | OP_READ)
  - 15 ⇒ CRUD operations (OP_CREATE | OP_READ | OP_UPDATE | OP_DELETE)
  - 31 ⇒ CRUDE operations (OP_CREATE | OP_READ | OP_UPDATE | OP_DELETE | OP_EXECUTE)

- Resource Regex: The regular expression string that matches zero or more resources over which a permission will be granted or revoked. Recall that resources are named using hierarchical, URL-like strings. By combining the hierarchical nature of resource names with the flexibility of regular expressions, we can refer to an entire family of resources with one string.

- Control Script: An optional string containing an expression which evaluates to True or False. Hifax assumes it evaluates to True if it is not provided, which is what makes it optional. The control script has access to contextual objects like the current user, the resource, etc.
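The bit-per-operation encoding maps naturally onto Python's enum.IntFlag. This sketch assumes the five CRUDE bits are 0x01 through 0x10, which is what makes the composite values 3, 15 and 31 come out; the Op class and the allows helper are hypothetical names used for illustration.

```python
from enum import IntFlag


class Op(IntFlag):
    """CRUDE operation bits (a hypothetical mirror of the OP_* constants)."""
    CREATE = 0x01
    READ = 0x02
    UPDATE = 0x04
    DELETE = 0x08
    EXECUTE = 0x10


CR = Op.CREATE | Op.READ           # 3
CRUD = CR | Op.UPDATE | Op.DELETE  # 15
CRUDE = CRUD | Op.EXECUTE          # 31


def allows(granted: Op, requested: Op) -> bool:
    """True if every requested operation bit is also set in the granted mask."""
    return (granted & requested) == requested


print(int(CR), int(CRUD), int(CRUDE))     # 3 15 31
print(allows(CRUD, Op.READ | Op.UPDATE))  # True
print(allows(CR, Op.EXECUTE))             # False
```

Note that combining flags uses the bitwise | operator; the logical or would simply return one of its operands.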

2.5.1. Permission Context

Any resource access is controlled by checking that the user has the necessary permissions for the resource in question. These permission checks can be carried out using an object called the permission context, which provides the following information to the access control enforcing code:

- Principal: The user who tries to access the resource. This is likely to be the logged-in user of the system.
- Resource: The resource to be accessed. The checking code will be able to access all the parameters of the resource (both static and dynamic ones).
- Operations: The operations to be carried out. This will be a combination of C/R/U/D/E operations on a given resource.

These three pieces of key contextual information, principal (p), resource (r) and operation (o), are collectively known as PRO.

2.5.2. Control Script

The control script of a permission allows fine tuning of the access control mechanism based on the dynamic parameters of the resources being controlled.

For example, suppose we want only users with the role "IBXTraders" to access deal data whose counterparty is "IBXBank". This can be expressed using the following hypothetical control script:

HasRole(p.username, "IBXTraders") and (r.counterparty == "IBXBank")

Without delving into too much detail, it is important to observe a few things in the example above:

- Context objects such as the principal (p) and the resource (r) have attributes that can be used in permission checking code.
- Complex expressions can be composed using Boolean operators (and, or, xor, etc.) and parentheses.
- Some built-in functions, such as HasRole, are provided by the Hifax runtime and can be useful in permission checking code.
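The syntax of Hifax control scripts isn't pinned down here, so the following is only a sketch: it models the hypothetical IBXTraders script as a plain Python predicate over the principal p and the resource r. HasRole is stubbed with an assumed in-memory role table, and Principal and Deal are hypothetical stand-ins for the context objects. A real runtime would parse and evaluate the script string itself, ideally in a sandbox rather than with eval.

```python
from dataclasses import dataclass

# Hypothetical role table standing in for the Hifax runtime's role lookup.
USER_ROLES = {"trader1": {"IBXTraders"}, "clerk1": set()}


def HasRole(username: str, role: str) -> bool:
    """Stub of the built-in HasRole function: True if the user holds the role."""
    return role in USER_ROLES.get(username, set())


@dataclass
class Principal:
    username: str


@dataclass
class Deal:
    counterparty: str  # dynamic parameter, known only once the record is loaded


def control_script(p: Principal, r: Deal) -> bool:
    # Python rendering of:
    #   HasRole(p.username, "IBXTraders") and (r.counterparty == "IBXBank")
    return HasRole(p.username, "IBXTraders") and (r.counterparty == "IBXBank")


print(control_script(Principal("trader1"), Deal("IBXBank")))    # True
print(control_script(Principal("clerk1"), Deal("IBXBank")))     # False
print(control_script(Principal("trader1"), Deal("OtherBank")))  # False
```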

2.6. Role

A role is just a set of permissions. However, like groups, roles are hierarchically structured and include other roles as subroles.

Roles and permissions form a directed acyclic graph called RPG (Role Permission Graph) whose root node is a special role called RootRole.

2.7. User/Group and Permission/Role Isomorphism

The hierarchical structuring that governs the relationship between users and groups, as discussed in the section Group, also applies to the relationship between permissions and roles. In other words, roles are just hierarchically organized collections of permissions and support grouping (i.e. subroles), sharing, recursive inheritance (i.e. ancestral roles), non-cyclicalness and banning (i.e. disallowing a permission).

The final set of permissions in a given role, including all its subroles recursively, is called the role effective permission set (REPS). Notice how REPS is defined in the same vein as its dual, GEUS.

3. Principles

Having discussed the core concepts of the access control domain, we are ready to state the founding principles of Hifax. There are four fundamental architectural pillars on which Hifax stands:

- Flexible user management: Deals with the users (i.e. actors)
- Flexible resource management: Deals with the resources (i.e. objects)
- Flexible permission management: Deals with the permissions (i.e. actions)
- Declarative access control enforcement: Deals with enforcing access control on the resources in a straightforward way

We will explain these principles within the context of a concrete example, using a fictional business system called X1Sys. X1Sys is a business application consisting of two modules (i.e. areas of functionality):

- Sales: Deals with customers and selling products to them
- Accounting: Keeps track of business transactions

We have created some fictional data to illustrate the implementation of Hifax for a basic system like X1Sys.

3.1. Flexible User Management

Hifax supports very flexible and highly scalable user management by leveraging the group user graph to manage users and their groups.

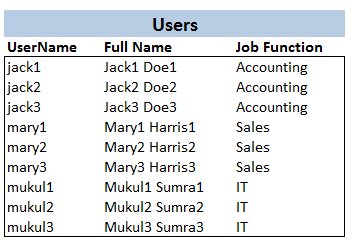

We start by showing the list of users we have for X1Sys:

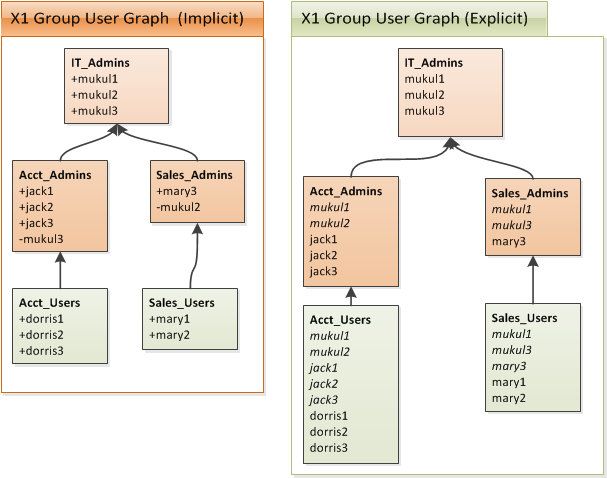

We have users from three major departments of the organization: Accounting, Sales and IT. We can place these users into hierarchical groups to better manage them for access control (among other) purposes. Below you will find such a group organization:

This diagram consists of two parts: an implicit and an explicit display of group/user membership for X1Sys. On the left side, you see the definition of the groups, and on the right hand side you see the effective user list of each group. Notice the following about this diagram:

- Each group has a name, shown in bold at the top of the group box, e.g. IT_Admins.

- Each group can have directly added or removed user members. When a user is added, this is shown by prefixing the user's username with a + sign; when a user is removed (i.e. banned), this is shown with a - prefix.

- A group can inherit users from zero or more parent groups. Conversely, a group itself can be a parent of zero or more groups. The parent-child relationship is also known as the subgroup-group relation. In the figure, IT_Admins is a subgroup of both the Acct_Admins and Sales_Admins groups. In other words, IT_Admins is a parent of those two groups. It is also said to be a grandparent of Sales_Users.

- On the right hand side of the figure, you see the effective user set of each group. These are the lists of users obtained after taking group inheritance and direct membership precedence rules into consideration. The algorithm for this is given in the section Group Effective User Set (GEUS) Computation.

3.2. Flexible Resource Management

Anything can be a resource in a Hifax implementation, and resources are named using hierarchically structured URL-like strings. This allows referring to entire families of resources using regular expressions.

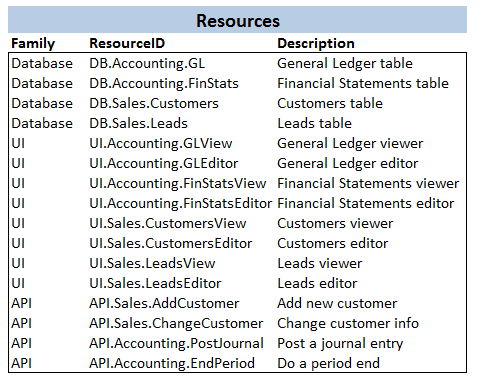

There are three major types of resources in X1Sys: database entities, UI screens and API functions. These define the families of resources during the resource definition process and influence the hierarchical naming convention used when assigning an identifier to each resource. Below we show the resources defined for X1Sys:

As noted in the concept section Resource, the level of granularity used to designate resources is entirely arbitrary and is driven by the level of access control desired in the system. In this case, we have chosen a reasonably fine-grained approach, controlling access at the level of screens, API functions and database entities.

3.3. Flexible Permission Management

Hifax provides very flexible and highly scalable permissioning by leveraging the role permission graph to provide a powerful role-based security model. This model is based on the ability to assign both specific permissions and roles directly to either groups or users.

Just like users can be organized into groups, permissions can also be organized into hierarchical roles.

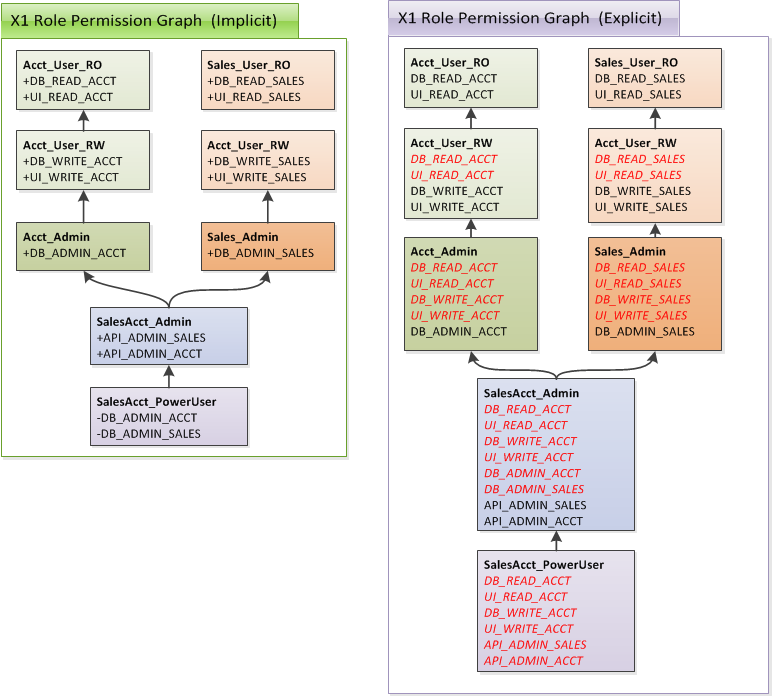

An example of such an organization for X1Sys is shown in the figure below:

You will see the implicit and explicit (i.e. exploded) views of the role permission graph (RPG) defined for X1Sys. The structural similarities between this picture and the one shown earlier for users/groups are unmistakable. This is what we meant by isomorphism in the section User/Group and Permission/Role Isomorphism.

Notice that inherited (i.e. indirect) permissions in a role are shown in an italic red font in the above diagram, while directly defined permissions are shown in a normal black font.

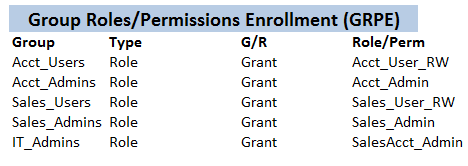

3.3.1. Group Roles/Permissions Enrollment (GRPE)

Both specific permissions and roles (i.e. packages of permissions) can be either given to (granted) or taken from (revoked) a group. We refer to this process as group roles/permissions enrollment, and it works in a recursive fashion.

The group role enrollment information of X1Sys is shown below:

You will notice that there is a strong similarity between group names and the roles usually assigned to them (e.g. the Sales_Admins group vs the Sales_Admin role). Though following such a convention is usually useful and convenient (as heavily practiced in functionally less limited access control schemes), there is absolutely no requirement to do so as far as Hifax is concerned. In fact, you can see some examples in the diagram that do not follow this convention (e.g. the IT_Admins group is assigned the SalesAcct_Admin role).

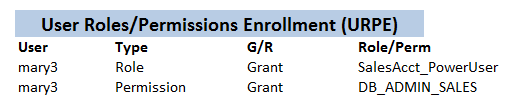

3.3.2. User Roles/Permissions Enrollment (URPE)

Both specific permissions and roles (i.e. packages of permissions) can be either given to (granted) or taken from (revoked) a user. We refer to this process as user roles/permissions enrollment, and it works in a recursive fashion.

Moreover, a user will also get an effective set of permissions from the groups it belongs to. An example of this is shown in the following figure:

Notice how user mary3 has been given both the role SalesAcct_PowerUser and the permission DB_ADMIN_SALES. This addresses the fact that the SalesAcct_PowerUser role has been specifically created without that permission (as the explicit revoke operation in the X1 RPG Graph shows), while we nevertheless want to give this particular user that permission.

3.4. Declarative Access Control Enforcement

Any part of a system that needs to be access controlled can achieve this by simply expressing the essential contextual information (the resource to be guarded and the operations that are going to be carried out on it) to the Hifax runtime. This contextual information is specific to that part of the system and does not require knowledge of anything else, in particular the access control related operational aspects of the system (i.e. its users and groups, permissions, roles, etc.), to function properly. This externalizes such operational aspects of the system as much as possible, so that they can be administered externally in a policy-driven fashion.

To illustrate how declarative access control enforcement works, let's consider a specific part of X1Sys that we designate as needing access control: the API function that performs the accounting period end. We earlier identified it as a resource named API.Accounting.EndPeriod in the X1Sys resource specification.

Imagine we have a function called AcctEndPeriod() in the X1Sys codebase that implements this API. It will have an outline like the following:

```python
def AcctEndPeriod(*args, **kwargs):                      # (1)
    CheckAccess("API.Accounting.EndPeriod", OP_EXECUTE)  # (2)
    DoAcctPeriodEnd()                                    # (3)
```

- (1) API function (i.e. the resource) to be access controlled
- (2) Declarative access control enforcement, stating only the resource and the operation to be carried out
- (3) Rest of the function implementation

Note how clean the access control enforcement code above is. The AcctEndPeriod implementation just uses the key function provided by the Hifax runtime, CheckAccess(), and tells that function what needs to be guarded (i.e. the combination of resource and operation). Everything else in the complex machinery of access control is handled by the Hifax runtime in a data-driven and policy-controlled manner, within an operational environment managed externally by the relevant operational staff.
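The following self-contained Python sketch fills in just enough runtime to make the outline executable. Everything except the names AcctEndPeriod, CheckAccess, DoAcctPeriodEnd and OP_EXECUTE is hypothetical: the AccessDenied exception, the CURRENT_USER variable, the GRANTS table standing in for real Hifax policy data, and the requires decorator. The OP_EXECUTE value is likewise an assumption. The decorator shows one Pythonic way to lift the guard out of the function body entirely while keeping enforcement just as declarative.

```python
import functools
import re

OP_EXECUTE = 0x10  # assumed value of the execute bit


class AccessDenied(Exception):
    """Raised when no grant covers the requested resource and operations."""


CURRENT_USER = "acct_clerk"  # hypothetical logged-on principal
# Hypothetical policy data: user -> list of (resource regex, operation mask).
GRANTS = {"acct_clerk": [(r"API\.Accounting\..*", OP_EXECUTE)]}


def CheckAccess(resource: str, ops: int) -> None:
    """Return silently if some grant covers resource and ops, otherwise raise."""
    for pattern, mask in GRANTS.get(CURRENT_USER, []):
        if re.fullmatch(pattern, resource) and (mask & ops) == ops:
            return
    raise AccessDenied(f"{CURRENT_USER} may not perform {ops:#x} on {resource}")


def DoAcctPeriodEnd():
    return "period closed"  # stand-in for the real period-end logic


def AcctEndPeriod(*args, **kwargs):
    CheckAccess("API.Accounting.EndPeriod", OP_EXECUTE)  # the only access control line
    return DoAcctPeriodEnd()


def requires(resource: str, ops: int):
    """Decorator variant: run CheckAccess before the wrapped function executes."""
    def decorate(fn):
        @functools.wraps(fn)
        def wrapper(*args, **kwargs):
            CheckAccess(resource, ops)
            return fn(*args, **kwargs)
        return wrapper
    return decorate


@requires("API.Sales.CreateOrder", OP_EXECUTE)  # hypothetical Sales API resource
def SalesCreateOrder(*args, **kwargs):
    return "order created"


print(AcctEndPeriod())  # period closed
```

In a real deployment the decision would be driven by the principal's effective permissions rather than a hard-coded dictionary; the point here is only that the guarded functions never mention users, groups or roles.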

4. Algorithms

In this section, we provide the details of the algorithms, such as GEUS and REPS, which produce flattened-out (i.e. effective) sets of access control objects (e.g. users, permissions, etc.). Using these effective sets not only allows permissions to be checked correctly, by ensuring that all the contributing elements in a hierarchy are incorporated into the evaluation, but also lets this process run as efficiently as possible thanks to techniques such as caching.

In the following discussions, we will frequently be adding/removing items to/from sets. A set does not have duplicates and trying to add an element that already exists in a set is a no-op. Similarly, trying to remove an element that does not exist from a set is also a no-op. We will not repeat these points to keep the descriptions of our algorithms concise.

An important feature of the hierarchies we are dealing with in the access control domain is that we want lower levels to override upper levels (i.e. grandparents). This way, user and role hierarchies can be set up with generic parameters at the top, safe in the knowledge that they can be overridden in specific settings.

To that end, you will see frequent use of a set called SeenNodes in our algorithms. The algorithms we provide are recursively defined: they start at the most specific node (level) of a particular graph (e.g. GUG or RPG) and work their way up to the top of the graph. If we have already "seen" a particular node in the graph (e.g. a role being revoked), that information should be reflected in the result of the algorithm even if that node is encountered again at higher levels (e.g. the same role being granted there). We achieve this by keeping track of each unique node we process in the SeenNodes set and ignoring a node if it is already in that set (i.e. we have already processed it at a lower level of the graph).

A rather peculiar feature of the graphs we are dealing with is that we support both adding nodes to and removing nodes from our graphs. Moreover, we support the feature of overriding inheritance i.e. the lower levels of the graphs having the ability to determine the effective member set by virtue of either adding a new node or specifying the removal of a node (effectively banning it).

4.1. Group Effective User Set (GEUS) Computation

Problem: Given a group G, find all the users that are children and grandchildren (recursively) of that group.

Solution:

1. Initialize the sets GEUS and SeenNodes to be empty.
2. For each direct child C of G (i.e. each user directly added to or banned from G) do the following:
   - If C is in SeenNodes, continue with the next child.
   - Otherwise add it to SeenNodes.
   - If C is being added, add it to GEUS.
3. For each parent node P of G do the following:
   - If P is RootGroup, stop.
   - Otherwise recursively go to Step #2 with P replacing G.
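A direct Python transcription of these steps follows. It is a sketch under stated assumptions: the Group class and compute_geus function are hypothetical names, direct user entries are stored as a mapping from username to True (added) or False (banned), and "If P is RootGroup, stop" is read as "do not recurse into RootGroup".

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass(eq=False)
class Group:
    """Hypothetical GUG group node."""
    name: str
    # Direct user entries: username -> True (added) or False (banned).
    members: dict[str, bool] = field(default_factory=dict)
    # Subgroups, i.e. this group's parents in Hifax terminology.
    parents: list[Group] = field(default_factory=list)


def compute_geus(group: Group, root_name: str = "RootGroup") -> set[str]:
    geus: set[str] = set()  # Step 1: GEUS starts empty
    seen: set[str] = set()  # Step 1: SeenNodes starts empty

    def visit(g: Group) -> None:
        # Step 2: the first (lowest-level) decision about a user wins.
        for user, added in g.members.items():
            if user in seen:
                continue
            seen.add(user)
            if added:
                geus.add(user)
        # Step 3: recurse into each parent, but never into RootGroup.
        for p in g.parents:
            if p.name != root_name:
                visit(p)

    visit(group)
    return geus


# Hypothetical example: ProjectX includes Engineers but bans bob.
engineers = Group("Engineers", {"alice": True, "bob": True})
project = Group("ProjectX", {"carol": True, "bob": False}, [engineers])
print(sorted(compute_geus(project)))  # ['alice', 'carol']
```

The steps do not say in which order a group's parents are visited. This sketch walks them depth first in list order, so if two branches of the graph disagree about the same user, the branch visited first decides.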

4.2. Role Effective Permission Set (REPS) Computation

Problem: Given a role R, find all the permissions included in that role directly or indirectly (i.e. in a recursive fashion)

Solution:

1. Initialize the sets REPS and SeenNodes to be empty.
2. For each direct permission M of R do the following:
   - If M is in SeenNodes, continue with the next permission.
   - Otherwise add it to SeenNodes.
   - If M is being granted (i.e. added), add it to REPS.
3. For each parent node P of R do the following:
   - If P is RootRole, stop.
   - Otherwise recursively go to Step #2 with P replacing R.
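REPS follows the same pattern as GEUS, with permissions in place of users and roles in place of groups. The Python sketch below uses hypothetical names (Role, compute_reps and made-up permission names) and reads "If P is RootRole, stop" as "do not recurse into RootRole". The example shows a role revoking a permission that one of its subroles grants.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass(eq=False)
class Role:
    """Hypothetical RPG role node."""
    name: str
    # Direct permission entries: name -> True (granted) or False (revoked).
    permissions: dict[str, bool] = field(default_factory=dict)
    # Subroles, i.e. this role's parents in the RPG.
    parents: list[Role] = field(default_factory=list)


def compute_reps(role: Role, root_name: str = "RootRole") -> set[str]:
    reps: set[str] = set()  # Step 1
    seen: set[str] = set()  # Step 1: SeenNodes

    def visit(r: Role) -> None:
        for perm, granted in r.permissions.items():  # Step 2
            if perm in seen:
                continue  # already granted or revoked at a lower level
            seen.add(perm)
            if granted:
                reps.add(perm)
        for p in r.parents:  # Step 3
            if p.name != root_name:
                visit(p)

    visit(role)
    return reps


admin = Role("Admin", {"DB.Admin": True, "Reports.Run": True})
power_user = Role("PowerUser", {"Sales.Edit": True, "DB.Admin": False}, [admin])
print(sorted(compute_reps(power_user)))  # ['Reports.Run', 'Sales.Edit']
```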

4.3. Group Effective Permission Set (GEPS) Computation

Problem: Given a group G, find all the permissions given to it directly or indirectly (i.e. in a recursive fashion)

Solution:

1. Initialize the sets GEPS and SeenNodes to be empty.
2. For each direct permission M of G do the following:
   - If M is in SeenNodes, continue with the next permission.
   - Otherwise add it to SeenNodes.
   - If M is being granted (i.e. added), add it to GEPS.
3. For each role R directly assigned to G do the following:
   - Let S be the REPS for R.
   - For each permission M in S do the following:
     - If M is in SeenNodes, continue with the next permission.
     - Otherwise add it to SeenNodes.
     - If M is being granted (i.e. added), add it to GEPS.
4. For each parent node P of G do the following:
   - If P is RootGroup, stop.
   - Otherwise recursively go to Step #2 with P replacing G.
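A Python sketch of GEPS is shown below. To keep it short, it assumes the REPS of every role has already been computed (for example, cached) and is passed in as a reps_of mapping; the Group class, compute_geps and all names in the example are hypothetical. Since a REPS only ever contains granted permissions, every permission taken from a role counts as granted.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Group:
    """Hypothetical group node carrying its permission and role enrollments."""
    name: str
    permissions: dict[str, bool] = field(default_factory=dict)  # granted / revoked
    roles: list[str] = field(default_factory=list)              # roles assigned to it
    parents: list[Group] = field(default_factory=list)          # its subgroups


def compute_geps(group: Group, reps_of: dict[str, set[str]],
                 root_name: str = "RootGroup") -> set[str]:
    """reps_of maps a role name to its (assumed precomputed) REPS."""
    geps: set[str] = set()
    seen: set[str] = set()  # SeenNodes

    def take(perm: str, granted: bool) -> None:
        if perm in seen:
            return  # decided at a lower level already
        seen.add(perm)
        if granted:
            geps.add(perm)

    def visit(g: Group) -> None:
        for perm, granted in g.permissions.items():  # Step 2: direct permissions
            take(perm, granted)
        for role in g.roles:                           # Step 3: roles, via their REPS
            for perm in reps_of[role]:
                take(perm, True)
        for p in g.parents:                            # Step 4: move up, skip RootGroup
            if p.name != root_name:
                visit(p)

    visit(group)
    return geps


reps_of = {"Reporting": {"Reports.Run", "Reports.Export"}}
staff = Group("Staff", permissions={"Login": True}, roles=["Reporting"])
interns = Group("Interns", permissions={"Reports.Export": False}, parents=[staff])
print(sorted(compute_geps(interns, reps_of)))  # ['Login', 'Reports.Run']
```

In the example, the Interns group revokes Reports.Export directly, which overrides the same permission arriving higher up through the Reporting role held by Staff.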

4.4. User Effective Permission Set (UEPS) Computation

Problem: Given a user U, find all the permissions given to it directly or indirectly (i.e. in a recursive fashion, considering all group memberships).

Solution:

1. Initialize the sets UEPS and SeenNodes to be empty.
2. For each direct permission M of U do the following:
   - If M is in SeenNodes, continue with the next permission.
   - Otherwise add it to SeenNodes.
   - If M is being granted (i.e. added), add it to UEPS.
3. For each role R directly assigned to U do the following:
   - Let S be the REPS for R.
   - For each permission M in S do the following:
     - If M is in SeenNodes, continue with the next permission.
     - Otherwise add it to SeenNodes.
     - If M is being granted (i.e. added), add it to UEPS.
4. For each parent group G of U do the following:
   - Let T be the GEPS for G.
   - For each permission M in T do the following:
     - If M is in SeenNodes, continue with the next permission.
     - Otherwise add it to SeenNodes.
     - If M is being granted (i.e. added), add it to UEPS.
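The UEPS steps can be sketched the same way, again assuming the REPS of each role and the GEPS of each group are precomputed and passed in as mappings. The example loosely mirrors the mary3 case from the section User Roles/Permissions Enrollment: the direct DB_ADMIN_SALES grant ends up in the result even though the SalesAcct_PowerUser role does not include it. The role's other permissions, the Sales_Team group and its permissions are made up for illustration.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class User:
    """Hypothetical user node with direct enrollments and group memberships."""
    name: str
    permissions: dict[str, bool] = field(default_factory=dict)  # granted / revoked
    roles: list[str] = field(default_factory=list)
    groups: list[str] = field(default_factory=list)


def compute_ueps(user: User, reps_of: dict[str, set[str]],
                 geps_of: dict[str, set[str]]) -> set[str]:
    """reps_of / geps_of hold precomputed REPS and GEPS keyed by role / group name."""
    ueps: set[str] = set()
    seen: set[str] = set()  # SeenNodes

    def take(perm: str, granted: bool) -> None:
        if perm in seen:
            return
        seen.add(perm)
        if granted:
            ueps.add(perm)

    for perm, granted in user.permissions.items():  # Step 2: direct permissions
        take(perm, granted)
    for role in user.roles:                           # Step 3: directly assigned roles
        for perm in reps_of[role]:
            take(perm, True)
    for group in user.groups:                         # Step 4: groups, via their GEPS
        for perm in geps_of[group]:
            take(perm, True)
    return ueps


reps_of = {"SalesAcct_PowerUser": {"Sales.Read", "Acct.Read"}}
geps_of = {"Sales_Team": {"Sales.Read", "Login"}}
mary3 = User("mary3", permissions={"DB_ADMIN_SALES": True},
             roles=["SalesAcct_PowerUser"], groups=["Sales_Team"])
print(sorted(compute_ueps(mary3, reps_of, geps_of)))
# ['Acct.Read', 'DB_ADMIN_SALES', 'Login', 'Sales.Read']
```

Because direct enrollments are processed first, a permission revoked on the user itself (for example {"Login": False}) hides the same permission inherited from any group.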

4.5. Permission Checking

Permission checking involves checking whether a given user has access to a particular resource to perform a specific operation. The user in question is usually the current user — known as the principal — and any permission checking is done against the permission set — known as principal effective permission set (PEPS) — he/she/it is entitled to. PEPS is simply the UEPS computed for the current user (i.e. logged on user).

Permission checking is initiated and driven by the code — i.e. parts of the client system that implement Hifax — that needs to enforce access control and has the following general outline:

- Context Setup: Client code has to create a PermissionContext object which holds all the contextual parameters needed for permission checking:
  - Principal: Details about the current (logged-in) user who tries to access the resource. This is known to Hifax (usually via the underlying operating system APIs that identify the logged-on user), so it does not need to be set explicitly by the client code.
  - Resource: Details about the resource to be accessed. This has to be set explicitly by the client code, as Hifax has no way of knowing which resource is being accessed by the principal. It includes all static and dynamic attributes of the resource in question. Dynamic attributes, such as persisted record fields, have to be loaded by a system process before any access control can be performed with the principal's credentials.
  - Operations: The types of operations (i.e. a combination of the C/R/U/D/E operations) to be carried out. Like the resource, this has to be set explicitly by the client code.

- PEPS Creation: Hifax computes the effective set of all permissions the principal is entitled to (i.e. the PEPS). The PEPS is relatively expensive to create and is unlikely to change during a typical user session, so it is ideal for pre-computing and caching as part of the login process.

- Permission Check: The client code passes the PermissionContext object pc to the Hifax function CheckAccess(context). Hifax goes through each permission in the PEPS and checks whether it satisfies the requested operations on the resource as specified in pc. This might require evaluating the control script (if the permission has one) of each permission in the PEPS. If this process succeeds, CheckAccess(…) simply returns silently, and the client code that invoked it as its first statement proceeds with the rest of its control flow as usual. Otherwise, it throws an exception with the details of the permission check that failed. This diverts the normal control flow of the client code, possibly to the client's error reporting code, which can then use the error message generated by Hifax for logging and user notification purposes, and ultimately causes the client operation to fail.
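The permission checking outline can be condensed into a short Python sketch. The PermissionContext fields, the Permission class, the AccessDenied exception and the PEPS_CACHE dictionary (standing in for the PEPS computed at login) are all hypothetical, and the control script is modelled as an optional Python callable rather than a script string. In this sketch a single permission must cover all requested operation bits on its own, and a missing control script counts as True.

```python
from __future__ import annotations

import re
from dataclasses import dataclass
from typing import Any, Callable, Optional

OP_READ, OP_UPDATE = 0x02, 0x04  # two of the CRUDE bits from section 2.5


@dataclass
class PermissionContext:
    principal: str          # logged-on user; Hifax would normally fill this in
    resource: str           # name of the resource being accessed
    params: dict[str, Any]  # static and dynamic resource parameters
    operations: int         # requested C/R/U/D/E bit mask


@dataclass
class Permission:
    name: str
    resource_regex: str
    operations: int
    # Optional control script; None is treated as always True.
    control_script: Optional[Callable[[PermissionContext], bool]] = None


class AccessDenied(Exception):
    """Raised by CheckAccess when no permission in the PEPS satisfies the context."""


# Hypothetical PEPS cache, filled at login time: principal -> effective permissions.
PEPS_CACHE: dict[str, list[Permission]] = {}


def satisfies(m: Permission, pc: PermissionContext) -> bool:
    if not re.fullmatch(m.resource_regex, pc.resource):
        return False
    if (m.operations & pc.operations) != pc.operations:
        return False  # this permission does not cover every requested operation
    return m.control_script is None or m.control_script(pc)


def CheckAccess(pc: PermissionContext) -> None:
    """Return silently if some permission in the principal's PEPS allows pc."""
    for m in PEPS_CACHE.get(pc.principal, []):
        if satisfies(m, pc):
            return
    raise AccessDenied(
        f"{pc.principal} may not perform {pc.operations:#x} on {pc.resource}")


PEPS_CACHE["trader1"] = [
    Permission("Deals.IBXBank.Read", r"DB\.Deals\..*", OP_READ,
               lambda pc: pc.params.get("counterparty") == "IBXBank"),
]
CheckAccess(PermissionContext("trader1", "DB.Deals.Record",
                              {"counterparty": "IBXBank"}, OP_READ))  # passes silently
```

Treating the request as a bit mask also makes the failure case explicit: the same trader asking for OP_READ | OP_UPDATE is refused, because the only matching permission grants reading alone.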

5. Conclusion

We have presented an access control scheme that is structured around clearly defined abstractions and whose design is guided by well-founded principles. The architecture of Hifax allows great flexibility in terms of user and permission management thanks to hierarchical inheritance schemes built on directed acyclic graphs. We have provided fundamental algorithms that use those graphs to deliver powerful and flexible access control functionality.

We have also presented a simple deployment use case showing how Hifax could be implemented in the context of a basic business application. As this use case shows, at least to some extent, Hifax has the power to address the most complicated access control needs in an operationally efficient and clean way, while also being deployable to less demanding environments in a cost-effective way.

Appendix A: Acronyms

- DAG: Directed Acyclic Graph
- EPS: Effective Permission Set
- GEPS: Group Effective Permission Set
- GEUS: Group Effective User Set
- GRPE: Group Role Permission Enrollment
- GUG: Group User Graph
- REPS: Role Effective Permission Set
- RPG: Role Permission Graph
- RPE: Role Permission Enrollment
- PEPS: Principal Effective Permission Set
- UEPS: User Effective Permission Set
- URPE: User Role Permission Enrollment
- PRO: Principal/Resource/Operation
- CRUDE: Create/Read/Update/Delete/Execute

Glossary of Terms

- CRUDE: Create/Read/Update/Delete/Execute operations
- DAG: Directed Acyclic Graph
- Group: A set of users. Groups are hierarchically structured and have parent and child groups.
- GUG: Group User Graph. A DAG formed from users and groups.
- Operation: Basic actions that can be carried out on a resource by a user. It refers to C/R/U/D/E operations.
- Permission: A combination of a resource identifier (which could refer to more than one resource) together with the operations to be carried out on said resource(s)
- Principal: Currently logged-on user
- Resource: Any entity in a computer system which needs to be access controlled, e.g. GUI objects, database tables, API functions, etc.
- Role: A collection of permissions. Roles are hierarchically organized (i.e. they have parent and child roles).
- RPG: Role Permission Graph. A DAG formed from permissions and roles.
- User: Any actor in a computer system, including both human and non-human actors